HPC Web Portal

The HPC Web Portal lets you launch jobs on our HPC clusters from a web page. You need access to the clusters to use it; if you don't have it, mail Wes (w.hinsley@imperial.ac.uk) to get started. Then log in to the portal here. You may see a security warning about the page's certificate; this is because of a limitation of Imperial College's certificate, and it's safe to proceed. If you get this warning on a computer connected to Imperial, you may need to install the College's root certificate - links for Linux, Mac, or Windows.

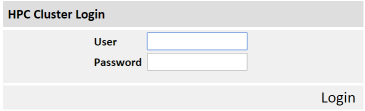

Logging in

Just type your DIDE username and password. The portal will tell you if your username or password is wrong, or if you don't have access to anything useful on the cluster - in which case, mail Wes (w.hinsley@imperial.ac.uk).

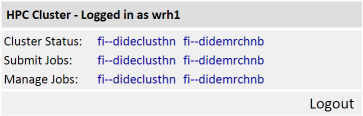

Main Menu

Depending on what you have access to, you'll see one or more clusters here. For each one, you can see how busy the cluster is (Cluster Status), submit jobs to that cluster, or manage jobs you've previously submitted.

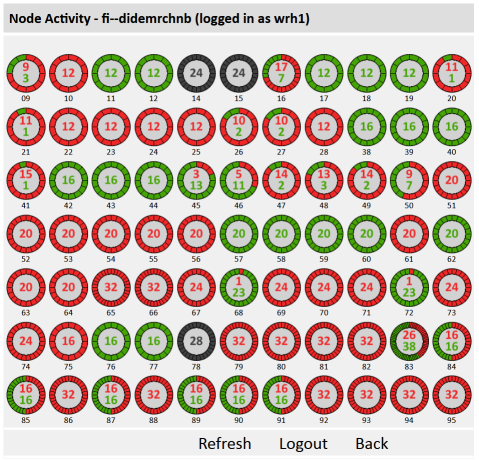

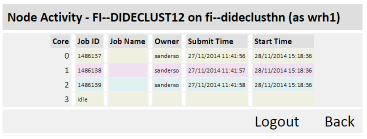

Cluster Status

Here you'll see the nodes on the cluster, and how many cores each has. Red squares are cores that are in use, and green squares are cores that are currently idle. You can click on the name of a busy node to see which jobs are currently running on that node, who owns them, and when they started.

Submitting Jobs

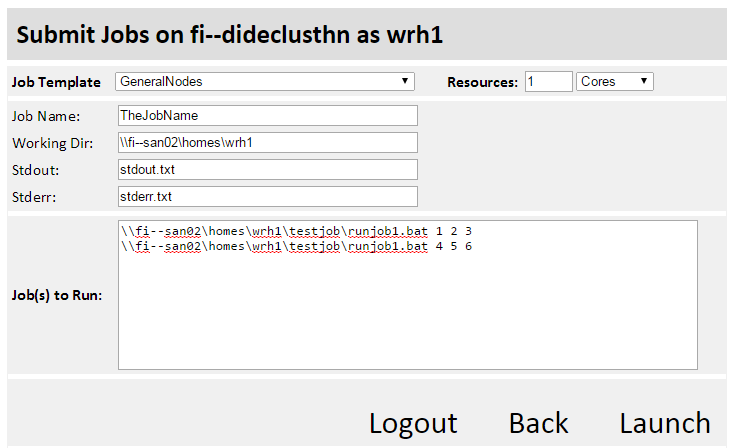

The job template determines which nodes the cluster will try to run your job on. Different users may have access to different job templates, depending on which nodes are most appropriate for their jobs. If you want to check whether you have the appropriate resources for your jobs, talk to Wes. First, select your job template and how many cores or nodes your job requires. Note that you should only select a number of nodes if your jobs use MPI. If you want to run 100 cores' worth of jobs, you actually want to submit 100 jobs, each of which uses 1 core.

The middle section (Job Name, Work Dir, Stdout, Stderr) is not critical. In the example image, I give my job a name and set a working directory; stdout (output to the console) and stderr (any errors to the console) will be sent to the files I've specified, in the working directory.

Lastly, paste a list of jobs in the box at the bottom. These are the commands you want the cluster to execute.
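If you need many single-core jobs (such as the 100 jobs mentioned above), it can be easier to generate the list with a short script than to type it by hand. The following is only a sketch under some assumptions: it assumes the box takes one command per line, and the executable name `mymodel.exe` and its `--seed` argument are hypothetical placeholders for whatever your own jobs actually run.

```python
# Sketch: build a list of single-core job commands to paste into the portal.
# ASSUMPTIONS (not from the portal itself): one command per line, and a
# hypothetical executable "mymodel.exe" that takes a hypothetical --seed flag.

EXECUTABLE = "mymodel.exe"  # hypothetical: replace with your own command
N_JOBS = 100                # one job per core you want to use


def build_job_list(executable, n_jobs):
    """Return one command line per job, numbered from 1 to n_jobs."""
    return [f"{executable} --seed {i}" for i in range(1, n_jobs + 1)]


job_lines = build_job_list(EXECUTABLE, N_JOBS)

# Print the commands so they can be copied into the job box.
print("\n".join(job_lines))
```

Run the script, copy its output, and paste it into the box, with the number of cores per job set to 1.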

Managing Jobs

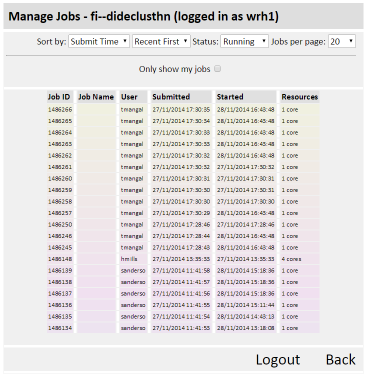

Here you can search through jobs on the cluster. Jobs can be in various states: Queued, Running, Finished, Canceled, or Failed. A different list comes up depending on the status you select. You can then sort by Job Name, User, or various times, depending on whether you are looking at jobs that have been submitted, started, or finished in some way. If you have jobs on the cluster, you can also ask to see only your own jobs.

Canceling Jobs

If you have jobs currently running on the cluster, a tickbox will appear next to each job, and the cancel button at the bottom will cancel the jobs you have ticked.

Notes for Windows Job Manager Users

If you're using Windows Job Manager, all the jobs submitted by web users will appear as if they've been submitted by "Apache-WebHPC". If you want to see the real owner of the jobs, right-click on the column headers, and ask it to show you the field "Run As" instead.

Disclaimer

This is a very new bit of software, released quite early as it may be very useful even in its early stages. Thanks to those who helped me test in the early stages - and please let me know any problems you come across, and features you'd like. I have many ideas for features, and not enough time - but I'll reorder my to-do list if there are things you'd like first.