HPC Web Portal

The HPC Web Portal lets you launch jobs on our HPC clusters through a webpage. It's our own in-house software, so bug fixes and feature requests can often be turned around faster than is usually the case (subject to how busy I am with other things!). You need access to the clusters to use it; if you don't have access, mail Wes (w.hinsley@imperial.ac.uk) to get started. Then log in to the portal here.

Logging in

Just type your DIDE username and password. The portal will tell you if your username or password is wrong, or if you don't have access to anything useful on the cluster - in which case, mail Wes (w.hinsley@imperial.ac.uk).

Main Menu

Depending on what you have access to, you'll see one or more clusters here, and for each one you can have a look at how busy the cluster is (Cluster Status), Submit jobs to that cluster, or manage jobs you've previously submitted.

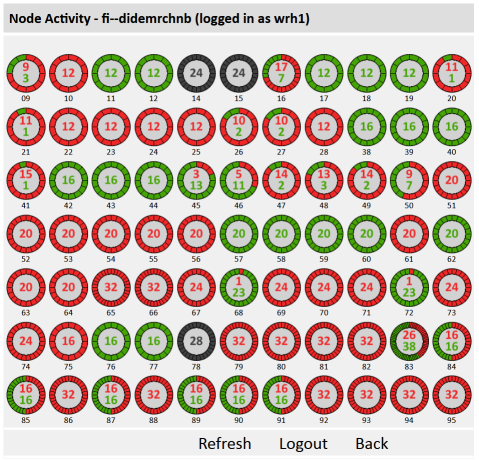

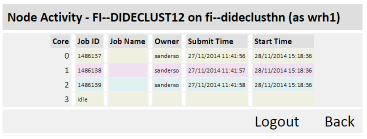

Cluster Status

Here you'll see the nodes on the cluster, expressed as pies. Or cakes, if you prefer. Red slices are cores that are in use, and green slices are cores that are currently idle. Grey means a node is out of general use at the moment - either because of a fault, or because it is reserved for some dedicated offline project, which happens from time to time. You can click on the name of a busy node to see which jobs are currently running on it, who owns them, and when they started.

Submitting Jobs

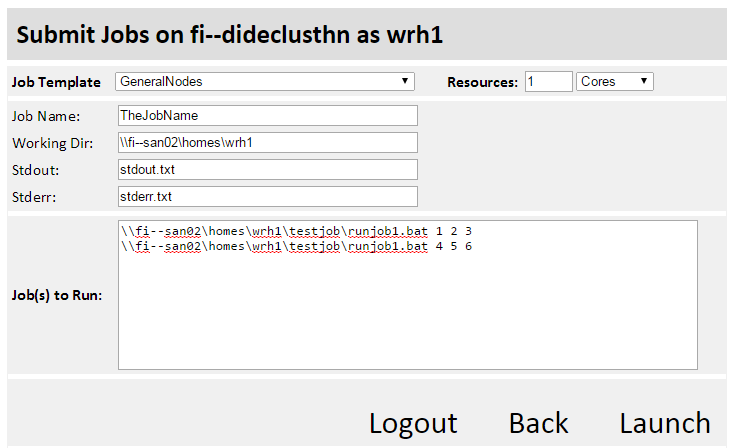

The job template determines which nodes the cluster will try to run your job on. Different users may have access to different job templates, depending on which nodes are most appropriate for their jobs. If you want to check whether you have the appropriate resources for your jobs, talk to Wes. So first select your job template, and how many cores or nodes your job requires. Note that you should only select a number of nodes if your jobs use MPI. If you want to run 100 cores' worth of jobs, then what you actually want is to submit 100 jobs, each of which uses 1 core.

The middle section (Job Name, Work Dir, Stdout, Stderr) is not critical. In the example image, I give my job a name, set a working directory, and send stdout and stderr (normal console output, and any errors written to the console) to the files I've specified in the working directory.

Lastly, paste a list of jobs in the box at the bottom. These are the commands you want the cluster to execute.
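If you have a lot of one-core jobs to submit, it can be easier to generate the list than to type it. Here is a minimal Python sketch of that idea. It assumes the box takes one command per line, and `run_model.bat` and its seed argument are made-up placeholders for whatever your own job runs.

```python
def one_core_jobs(executable, n_jobs, first_seed=1):
    """Build one command line per job, each differing only in its seed argument."""
    return [f"{executable} {seed}" for seed in range(first_seed, first_seed + n_jobs)]


# 100 cores' worth of work becomes 100 separate one-core jobs.
# "run_model.bat" is a hypothetical script name, not something the portal provides.
commands = one_core_jobs("run_model.bat", 100)

# Assumption: the job box accepts one command per line.
job_list = "\n".join(commands)

if __name__ == "__main__":
    print(job_list)
```

Run it, copy the output into the box, and select 1 core per job in the section above.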

Managing Jobs

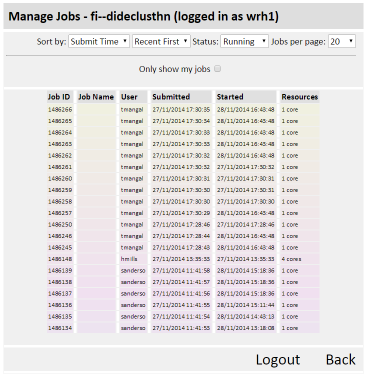

Here you can search through jobs on the cluster. Jobs can be in various states: Queued, Running, Finished, Canceled, or Failed. A different list comes up depending on the status you select. You can then sort by Job Name, User, or various times, depending on whether you are looking at jobs that have been submitted, started, or finished in some way. If you have jobs on the cluster, you can also ask to see only your own.

Canceling Jobs

If you have jobs currently running on the cluster, a tickbox will appear next to each one, and the cancel button at the bottom will cancel the jobs you have ticked.

Notes for Windows Job Manager Users

- If you're using Windows Job Manager, all the jobs submitted by web users will appear as if "Apache-WebHPC" is the owner of the job - which is true, but not useful. If you want to see who submitted the jobs, right click on the column headers, and ask it to show you the field "Run As" instead.

- Also note that jobs launched through the web portal can only be cancelled through the web portal. The reason is connected to the above: the command-line Job Cancel will only do what you ask if it thinks you are the owner of the job. In the web portal I handle this and work out who you are, but MS's command-line tools don't.

How it works

The webpage is written in simple PHP with JavaScript. PHP validates your username against the domain controller (which is very easy to do), and looks up your groups to see which clusters and job templates you should have access to. The site is served through HTTPS to keep this login process safe, and your login status is cached in an encrypted way on the server, so your login only gets sent once per session, not on every page visit.
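The group lookup can be pictured with a small sketch. This is written in Python purely for illustration (the portal itself is PHP), and every group, cluster and template name in it is invented; it only shows the general shape of mapping group membership to clusters and job templates.

```python
# Hypothetical mapping from a domain group to the cluster and job templates
# it unlocks. None of these names come from the real portal.
GROUP_ACCESS = {
    "hpc-general": ("cluster-a", ["GeneralNodes"]),
    "hpc-bigmem": ("cluster-a", ["BigMemNodes"]),
    "hpc-mpi": ("cluster-b", ["MPINodes"]),
}


def accessible_templates(user_groups):
    """Return {cluster: sorted template names} for the groups a user belongs to."""
    access = {}
    for group in user_groups:
        if group not in GROUP_ACCESS:
            continue  # groups that have nothing to do with the clusters are ignored
        cluster, templates = GROUP_ACCESS[group]
        access.setdefault(cluster, set()).update(templates)
    return {cluster: sorted(names) for cluster, names in access.items()}


def has_cluster_access(user_groups):
    """False means a valid login with nothing useful on any cluster."""
    return bool(accessible_templates(user_groups))
```

A user in `hpc-general` and `hpc-bigmem` would see one cluster with two templates; a user in neither would get the "no access to anything useful" message at login.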

The early test versions then worked by calling the standard MS-HPC command-line tools such as "job" and "node" and parsing the results. It has to do this by operating as a user called Apache-WebHPC, which has curious and very specific user rights to do only what we want it to. The inconvenience of that is that jobs get submitted with "Apache-WebHPC" as the owner (rather than you owning the jobs) - your username goes in a different field called "RunAs", which isn't returned by "job" and "node". There were other difficulties; to get the submitted time for a list of jobs, I'd have to call "job" once per job, which turns out to be much too slow to generate a nice webpage with.

Luckily, it's easy to write alternatives to the "job" and "node" commands in C#, using the freely downloadable HPC SDK, which comes with nice examples. So a new version of "job list" does all the querying and returns the fields for the webpage in one go. And really, that's about it. No rocket science here.
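To see why one query per job was too slow, here is a toy comparison in Python. `FakeScheduler` is a made-up stand-in that just counts how many times it is asked something; it is not the HPC SDK or the real "job" command, and the field names are assumptions.

```python
class FakeScheduler:
    """Toy stand-in for the cluster scheduler; counts the queries it receives."""

    def __init__(self, jobs):
        self._jobs = jobs  # {job_id: {"name": ..., "run_as": ..., "submitted": ...}}
        self.calls = 0

    def list_ids(self):
        self.calls += 1
        return list(self._jobs)

    def view(self, job_id):
        self.calls += 1
        return dict(self._jobs[job_id])

    def list_with_fields(self, fields):
        self.calls += 1
        return [{"id": job_id, **{f: job[f] for f in fields}}
                for job_id, job in self._jobs.items()]


def slow_listing(scheduler, fields):
    """Early approach: list the jobs, then ask about each job separately."""
    rows = []
    for job_id in scheduler.list_ids():
        job = scheduler.view(job_id)
        rows.append({"id": job_id, **{f: job[f] for f in fields}})
    return rows


def fast_listing(scheduler, fields):
    """Later approach: one query that returns every field the page needs."""
    return scheduler.list_with_fields(fields)
```

Both functions return the same rows, but for N jobs the first makes N + 1 queries and the second makes one. When each query means launching a separate command-line tool, that difference is what decides whether the page loads quickly.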

Disclaimer

This is a very new bit of software, released quite early as it may be very useful even in its early stages. Thanks to those who helped me test in the early stages - and please let me know any problems you come across, and features you'd like. I have many ideas for features, and not enough time - but I'll reorder my to-do list if there are things you'd like first.

Version History

In the style of R, each release has a deeply profound codename, representing the state of the software and/or author and/or universe/other at the time of release. The current version information is in the line of text at the bottom of each page.

| Version | Code Name | Date | Details |
|---|---|---|---|
| 1.0 | Mental Sphincter | 23/11/2014 | First fully functional test release. Special thanks Jack for testing. |
| 1.1 | Rustic Flapjack | 25/11/2014 | Added the "just my jobs" tickbox, refresh buttons and auto-refresh (10 seconds idle). Fix bug in HPCJobLister.exe viewing Queued jobs. |
| 1.1.1 | | 04/02/2015 | Fixed bug when system "Remote Commands" appear in the job lists. (They don't have all the fields we want to display). Thanks Francois for spotting/reporting. |
| 1.2 | Midnight Rooster | 12/02/2015 | Click on failed jobs for job info. |
| 1.2.1 | | 03/07/2015 | Fixed total cores in use under-reporting for jobs with very short command-lines. |
| 1.3 | Cactus Disaster | 10/09/2015 | Rewrite secure login. |
| 1.4 | Hilarious Misunderstanding | 20/11/2015 | Tighten up a number of security issues. (Thanks Rich). Allow for job dependencies (launched job waits until other job(s) finish) - see submission page. Cluster Status now shows offline cores in grey. |
| 1.5 | Inductor Penguin | 19/05/2016 | Redirection security fix. (Gulp). |
| 1.5.1 | Turbo Inductor Penguin | 14/11/2016 | Circumvent slow exec() from PHP - List Cluster Nodes is much faster now. |
| 1.5.2 | Complex Pavlova | 28/09/2018 | Clean-up cluster status, as having to scroll it was getting on my nerves. |